Self-hosting BitWarden on Google Cloud

Today, I spent some time deploying BitWarden on Google Cloud Platform (GCP). I wrote up some notes on the process I followed in case they might be helpful for anyone else.

A few warnings…

- Most individuals and organizations should not self-host password manager infrastructure. Unless you have deep IT security and cloud infrastructure operations experience, attempting to self-host your password manager infrastructure is likely to make you meaningfully less secure.

- As of late December 2022, the BitWarden unified deployment option is still in beta. Following these directions before it becomes generally available (GA) is inherently dangerous. For now, I’d suggest doing this only as a proof of concept while waiting for this option to reach GA.

What’s the potential value of self-hosting BitWarden?

There are a few reasons why self-hosting BitWarden might be a good choice for people or organizations that have specific security needs and the skills to implement and manage the deployment properly.

- The storage of your encrypted password vaults will be under your control. You might be able to control access to those more tightly than another provider would.

- You may also be able to limit network access to the password management backend infrastructure. Hosted services like LastPass and BitWarden’s own cloud offering need to be reachable from the entire Internet. Your organization might be able to restrict network access much more tightly, which reduces the attack surface available to an attacker.

What is needed to host BitWarden (unified deployment option) in Google Cloud Platform (GCP)?

Our deployment on GCP makes use of the following GCP services.

- Artifact Registry - This is used to store the BitWarden container image.

- Cloud Armor - This is used to limit network access to only IPs we specify.

- Cloud Load Balancing - This is used for TLS termination and automatic certificate renewal.

- Cloud Run - This is used as the serverless compute infrastructure to run the BitWarden container.

- Cloud SQL - This is used for data persistence.

- Secret Manager - This is used to store secret values needed by the application in a secure manner.

- Serverless VPC Access - This is used to provide a way for the containers running in Cloud Run to access resources that are only exposed on the internal VPC - such as Cloud SQL.

Requirements

You will need the following tools and accounts to complete this process.

- Docker

- Google Cloud SDK

- A GCP account that is able to create a new GCP project.

- An SMTP account that can be used to send email. I’m using SendGrid for this.

- The ability to create a new DNS host name and point it to a new IP.

Overview

This post will not provide detailed, step-by-step directions on how to configure all of these services. Instead, I’ll provide a high-level overview of what I did, with notes on particular items I think are worth knowing about. I’ll also try to provide some links to resources that may be helpful along the way.

Step 1: GCP Project / Account Security / Region Selection

- If possible, deploy this in a dedicated GCP project. Projects serve as a meaningful security boundary on GCP, so a dedicated project isolates BitWarden from anything else you may have running in other projects.

- Ideally, any accounts with privileged access to the new GCP project should be enrolled in the Google Advanced Protection Program. To do this, your accounts will need to be secured with FIDO security keys. This helps ensure that authentication to the underlying GCP resources used during the rest of this deployment is very strong.

- Choose any one GCP region to deploy all of this to. I used us-central1. Any region is fine, just be sure to deploy everything to that same region in order to avoid unnecessary latency between services etc.
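If you prefer the command line, the project and region setup above can be expressed as a handful of gcloud commands. This Python sketch only assembles and prints them for review. Each list could later be passed to `subprocess.run(cmd, check=True)`. The project ID `bw-selfhost-demo` is a hypothetical placeholder. A new project also needs a billing account linked before most of the later services can be enabled, and that step isn’t shown.

```python
import shlex

# Hypothetical placeholders: substitute your own values.
PROJECT_ID = "bw-selfhost-demo"
REGION = "us-central1"


def project_setup_commands() -> list[list[str]]:
    """Return gcloud commands (as argv lists) for a dedicated project and a default region."""
    return [
        ["gcloud", "projects", "create", PROJECT_ID],
        ["gcloud", "config", "set", "project", PROJECT_ID],
        # Default region for later `gcloud run` commands.
        ["gcloud", "config", "set", "run/region", REGION],
    ]


if __name__ == "__main__":
    # Print only; review before running anything.
    for cmd in project_setup_commands():
        print(shlex.join(cmd))
```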

Step 2: Service Accounts

I’d suggest using a couple of service accounts for this deployment. I’d create them as follows.

Docker

- This account will be used to push the BitWarden container to Artifact Registry.

- Configure this account with the “Artifact Registry Writer” role at the project level.

- Grant the GCP user account you will use to push the Docker container to Artifact Registry the “Service Account User” and “Service Account Token Creator” roles on this service account.

- Create a key (JSON format) for this account. Download it and store it in a secure location.

Cloud Run

- By default, Cloud Run services run as the Compute Engine default service account, which is far more highly privileged than this service needs. If you create an IAM service account with no roles, you can run the service we deploy as that account. This is a more secure place to start. Apart from read access to the specific secrets we create in Step 6, these containers don’t need any permissions to GCP APIs.
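Here is a sketch of the same two service accounts created with gcloud. As before, the commands are printed rather than executed. The account IDs `docker-pusher` and `bitwarden-run`, the key file name, and `you@example.com` are all hypothetical. The Cloud Run account deliberately gets no project-level roles.

```python
import shlex

# Hypothetical placeholders.
PROJECT_ID = "bw-selfhost-demo"
PUSHER = "user:you@example.com"  # the human account that will push images
DOCKER_SA = f"docker-pusher@{PROJECT_ID}.iam.gserviceaccount.com"


def service_account_commands() -> list[list[str]]:
    cmds = [
        # Docker account: may write to Artifact Registry, nothing else.
        ["gcloud", "iam", "service-accounts", "create", "docker-pusher", f"--project={PROJECT_ID}"],
        ["gcloud", "projects", "add-iam-policy-binding", PROJECT_ID,
         f"--member=serviceAccount:{DOCKER_SA}", "--role=roles/artifactregistry.writer"],
    ]
    # Let the pushing user act as, and mint tokens for, the Docker account.
    for role in ("roles/iam.serviceAccountUser", "roles/iam.serviceAccountTokenCreator"):
        cmds.append(["gcloud", "iam", "service-accounts", "add-iam-policy-binding", DOCKER_SA,
                     f"--member={PUSHER}", f"--role={role}"])
    # JSON key used later by `docker login`; store it somewhere safe.
    cmds.append(["gcloud", "iam", "service-accounts", "keys", "create", "docker-pusher-key.json",
                 f"--iam-account={DOCKER_SA}"])
    # Cloud Run runtime identity: created with no project-level roles.
    cmds.append(["gcloud", "iam", "service-accounts", "create", "bitwarden-run",
                 f"--project={PROJECT_ID}"])
    return cmds


if __name__ == "__main__":
    for cmd in service_account_commands():
        print(shlex.join(cmd))
```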

Step 3: Artifact Registry

Next, we simply need to get the BitWarden container image up to Artifact Registry so that we can deploy a Cloud Run service that uses it.

- Enable the Artifact Registry API.

- Create a new Artifact Registry repository

- Authenticate - I used the process described here. This uses the key created for the Docker service account above to authenticate you to Artifact Registry.

- Pull the BitWarden image down from Docker Hub. If you happen to be on an Apple silicon Mac (M1, M2, etc.), be sure to pull the amd64 version, not the arm64 version. At the time of writing, Cloud Run does not support arm64.

- Tag the image with the path of your new Artifact Registry repository.

- Push the image up to your new repository on Artifact Registry.
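Here is Step 3 as a printed command list. The repository name `bitwarden` is hypothetical. `bitwarden/self-host:beta` is my assumption about the beta unified image’s name and tag, so check BitWarden’s documentation for the current one. The `--platform linux/amd64` flag handles the Apple silicon caveat above.

```python
import shlex

# Hypothetical placeholders; SOURCE_IMAGE is an assumed image name/tag.
PROJECT_ID = "bw-selfhost-demo"
REGION = "us-central1"
REPO = "bitwarden"
SOURCE_IMAGE = "bitwarden/self-host:beta"
REGISTRY_HOST = f"{REGION}-docker.pkg.dev"
TARGET_IMAGE = f"{REGISTRY_HOST}/{PROJECT_ID}/{REPO}/self-host:beta"
KEY_FILE = "docker-pusher-key.json"


def artifact_registry_commands() -> list[list[str]]:
    return [
        ["gcloud", "services", "enable", "artifactregistry.googleapis.com"],
        ["gcloud", "artifacts", "repositories", "create", REPO,
         "--repository-format=docker", f"--location={REGION}"],
        # Authenticates with the service-account JSON key supplied on stdin.
        ["docker", "login", "-u", "_json_key", "--password-stdin", f"https://{REGISTRY_HOST}"],
        # Force amd64 so an Apple silicon Mac doesn't pull the arm64 variant.
        ["docker", "pull", "--platform", "linux/amd64", SOURCE_IMAGE],
        ["docker", "tag", SOURCE_IMAGE, TARGET_IMAGE],
        ["docker", "push", TARGET_IMAGE],
    ]


if __name__ == "__main__":
    for cmd in artifact_registry_commands():
        # Show the key being piped into `docker login`.
        prefix = f"cat {shlex.quote(KEY_FILE)} | " if cmd[:2] == ["docker", "login"] else ""
        print(prefix + shlex.join(cmd))
```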

Step 4: Cloud SQL

Next, we need a place for BitWarden to be able to durably store the data it needs to run. That place is Cloud SQL.

- I used the PostgreSQL 14 flavor of Cloud SQL. However, BitWarden supports several possible database choices.

- I’d recommend giving your Cloud SQL instance internal (private IP) connectivity only.

- Make sure you make availability and backup choices that are appropriate for your use case.

- Set a highly complex password on the postgres user account. Store it securely; we will need it shortly.
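Python’s `secrets` module is a reasonable way to generate a strong postgres password. This sketch pairs it with a private-IP-only Cloud SQL instance. The instance name and machine tier are hypothetical. A private IP also assumes private services access is already configured on the VPC, which isn’t shown.

```python
import secrets
import shlex
import string

# URL-safe characters only, so the password never needs escaping in a
# connection string or shell command (a convenience choice, not a BitWarden rule).
ALPHABET = string.ascii_letters + string.digits + "-_.~"

# Hypothetical placeholders.
PROJECT_ID = "bw-selfhost-demo"
REGION = "us-central1"
INSTANCE = "bitwarden-db"


def generate_password(length: int = 40) -> str:
    """Cryptographically random password drawn from ALPHABET."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def cloud_sql_commands() -> list[list[str]]:
    return [
        ["gcloud", "sql", "instances", "create", INSTANCE,
         "--database-version=POSTGRES_14", f"--region={REGION}",
         "--tier=db-custom-1-3840",  # hypothetical size: 1 vCPU, 3.75 GB RAM
         f"--network=projects/{PROJECT_ID}/global/networks/default",
         "--no-assign-ip"],  # private IP only, no public address
        # Prompts interactively, so the password stays out of shell history.
        ["gcloud", "sql", "users", "set-password", "postgres",
         f"--instance={INSTANCE}", "--prompt-for-password"],
    ]


if __name__ == "__main__":
    print("Generated password (store it securely):", generate_password())
    for cmd in cloud_sql_commands():
        print(shlex.join(cmd))
```

Forty characters from this 66-symbol alphabet works out to roughly 240 bits of entropy. That is far beyond brute-force range despite the restricted character set.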

Step 5: Set up a Serverless VPC Access Connector

Next, we need to add a Serverless VPC Access Connector. This will enable our serverless container running on Cloud Run to interact with network resources hosted on our VPC, like our Cloud SQL server. This means we don’t need to expose Cloud SQL on an external IP.

Documentation on how to create this can be found here.
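A connector needs its own unused /28 range. This sketch checks that before printing the create command. The connector name, the `10.8.0.0/28` range, and the list of existing ranges are hypothetical. 10.128.0.0/20 is the default auto-mode VPC subnet in us-central1. If you configured private services access for Cloud SQL, add that allocated range to the list too.

```python
import ipaddress
import shlex

# Hypothetical placeholders.
REGION = "us-central1"
CONNECTOR = "bw-connector"
CONNECTOR_RANGE = "10.8.0.0/28"
EXISTING_RANGES = ["10.128.0.0/20"]  # ranges already in use on the VPC


def check_connector_range(cidr: str, existing: list[str]) -> ipaddress.IPv4Network:
    """Raise ValueError unless cidr is a /28 that overlaps none of the existing ranges."""
    net = ipaddress.IPv4Network(cidr)
    if net.prefixlen != 28:
        raise ValueError(f"{cidr} is not a /28")
    for other in existing:
        if net.overlaps(ipaddress.IPv4Network(other)):
            raise ValueError(f"{cidr} overlaps {other}")
    return net


def vpc_connector_commands() -> list[list[str]]:
    check_connector_range(CONNECTOR_RANGE, EXISTING_RANGES)
    return [
        ["gcloud", "services", "enable", "vpcaccess.googleapis.com"],
        ["gcloud", "compute", "networks", "vpc-access", "connectors", "create", CONNECTOR,
         f"--region={REGION}", "--network=default", f"--range={CONNECTOR_RANGE}"],
    ]


if __name__ == "__main__":
    for cmd in vpc_connector_commands():
        print(shlex.join(cmd))
```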

Step 6: Set up secrets in Secret Manager

Now we need to create secrets and store them securely in Secret Manager. We will expose these to the Cloud Run container as environment variables soon.

- The password for the postgres account used with Cloud SQL.

- Your SendGrid API key (if you are using SendGrid for SMTP) or SMTP password if you are using something else.

I have previously seen issues with secrets that are not stored in the same region as the Cloud Run service. So I’d recommend choosing your secret storage locations manually (a user-managed replication policy) and making sure they include the region you plan to deploy the Cloud Run service to.

Step 7: Set up a Cloud Run Service

Up next, it’s time to deploy our service on Cloud Run. Here are some notes.

General:

- Use the container image we uploaded to Artifact Registry earlier.

- I set “CPU is always allocated” with min and max instances both set to 1. I’m not sure how well BitWarden would scale to zero or scale out to multiple containers. If I get time, I’d like to come back and explore that a bit. I also used 2 GB of RAM and 1 CPU. That seemed to work well for my testing. You can scale this up as needed; Cloud Run has great monitoring that can help identify whether more resources are needed.

- Connections: Connect the containers to your VPC by choosing the Serverless VPC Access Connector we set up above.

- Security: Select the service account we created to run the containers as. This prevents them from running as the overly privileged default service account.

- Configure the service’s ingress so it only accepts internal traffic and traffic from Cloud Load Balancing. This forces external requests through the load balancer, where Cloud Armor can restrict access by source IP.
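The general settings above map onto `gcloud run deploy` flags roughly as follows. This is a sketch with hypothetical names. `--no-cpu-throttling` corresponds to “CPU is always allocated”. The `--allow-unauthenticated` flag is my assumption about what the load balancer setup requires, not something stated above.

```python
import shlex

# Hypothetical placeholders, matching the names used in earlier sketches.
PROJECT_ID = "bw-selfhost-demo"
REGION = "us-central1"
SERVICE = "bitwarden"
IMAGE = f"{REGION}-docker.pkg.dev/{PROJECT_ID}/bitwarden/self-host:beta"
RUN_SA = f"bitwarden-run@{PROJECT_ID}.iam.gserviceaccount.com"
CONNECTOR = "bw-connector"


def deploy_command() -> list[str]:
    return [
        "gcloud", "run", "deploy", SERVICE,
        f"--image={IMAGE}", f"--region={REGION}",
        f"--service-account={RUN_SA}",   # not the default compute account
        f"--vpc-connector={CONNECTOR}",  # reach Cloud SQL's private IP
        "--cpu=1", "--memory=2Gi",
        "--min-instances=1", "--max-instances=1",
        "--no-cpu-throttling",           # "CPU is always allocated"
        "--ingress=internal-and-cloud-load-balancing",
        # Assumption: requests arriving via the external load balancer carry no
        # Google identity, so invocation must be unauthenticated; ingress plus
        # Cloud Armor are what restrict who can reach the service.
        "--allow-unauthenticated",
    ]


if __name__ == "__main__":
    print(shlex.join(deploy_command()))
```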

Environment Variables:

I used the following environment variables to expose non-secret settings to the containers. These are all explained well here.

- BW_DOMAIN

- BW_DB_PROVIDER

- BW_DB_SERVER

- BW_DB_DATABASE

- BW_DB_USERNAME

- BW_INSTALLATION_ID

- BW_INSTALLATION_KEY

- globalSettings__mail__replyToEmail

- globalSettings__mail__smtp__host

- globalSettings__mail__smtp__port

- globalSettings__mail__smtp__ssl

- globalSettings__mail__smtp__username

- adminSettings__admins

Note: GCP blocks outbound port 25 by default. You can get this block removed, but it’s quite a process. So your globalSettings__mail__smtp__port setting should ideally use port 587 or another port that your provider supports.

Secrets:

I exposed some of the secret values we stored in Secret Manager earlier to the container as environment variables.

- globalSettings__mail__smtp__password

- BW_DB_PASSWORD
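The environment variables and secrets could then be applied with `gcloud run services update`. All values below are hypothetical examples, and several variables from the list are omitted. The SMTP host and `apikey` username follow SendGrid’s conventions. The sketch also refuses port 25, per the note above. It rejects values containing commas too, because gcloud uses commas to separate entries.

```python
import shlex

SERVICE = "bitwarden"  # hypothetical
REGION = "us-central1"

# Hypothetical example values; see BitWarden's docs for what each should hold.
ENV = {
    "BW_DOMAIN": "bw.example.com",
    "BW_DB_PROVIDER": "postgresql",
    "BW_DB_SERVER": "10.20.0.3",       # Cloud SQL private IP (hypothetical)
    "BW_DB_DATABASE": "postgres",      # hypothetical
    "BW_DB_USERNAME": "postgres",
    "globalSettings__mail__smtp__host": "smtp.sendgrid.net",
    "globalSettings__mail__smtp__port": "587",
    "globalSettings__mail__smtp__username": "apikey",
    "adminSettings__admins": "admin@example.com",
    # ...remaining variables from the list above omitted for brevity.
}
# Env var name -> Secret Manager secret name (hypothetical), pinned to "latest".
SECRET_REFS = {
    "BW_DB_PASSWORD": "bw-db-password",
    "globalSettings__mail__smtp__password": "smtp-password",
}


def update_command(env: dict[str, str], secret_refs: dict[str, str]) -> list[str]:
    if env.get("globalSettings__mail__smtp__port") == "25":
        raise ValueError("GCP blocks outbound port 25 by default; use 587 or similar")
    for key, value in env.items():
        # gcloud splits these flags on commas (see `gcloud topic escaping`).
        if "," in value:
            raise ValueError(f"{key} contains a comma; use gcloud's alternate-delimiter syntax")
    return [
        "gcloud", "run", "services", "update", SERVICE, f"--region={REGION}",
        "--update-env-vars=" + ",".join(f"{k}={v}" for k, v in env.items()),
        "--update-secrets=" + ",".join(f"{k}={s}:latest" for k, s in secret_refs.items()),
    ]


if __name__ == "__main__":
    print(shlex.join(update_command(ENV, SECRET_REFS)))
```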

Intermission

This is a good place to pause and review the Cloud Run logs. If all is well, you should have a working (green) service on Cloud Run. If not, I’d stop and troubleshoot that now. Once you are ready, we will continue below and get some external connectivity wired up.

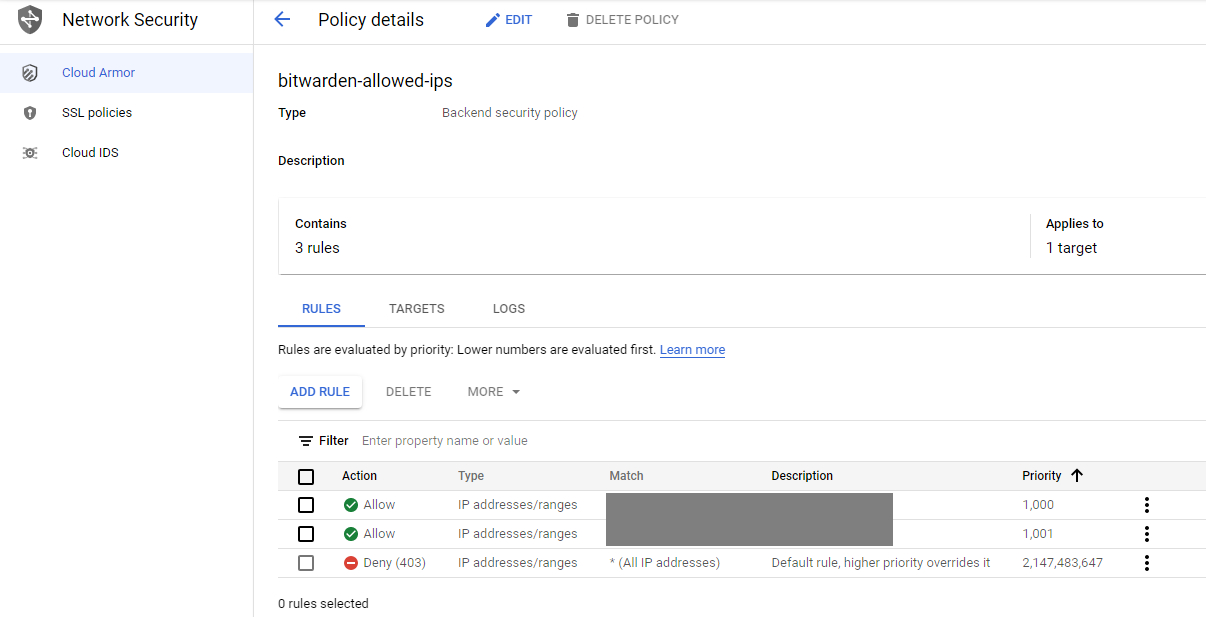
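To review the logs from the command line instead of the console, a Cloud Logging filter like the one below should work. The service name `bitwarden` is hypothetical.

```python
import shlex

SERVICE = "bitwarden"  # hypothetical
REGION = "us-central1"

# Cloud Logging filter for this service's revisions.
LOG_FILTER = (
    'resource.type="cloud_run_revision" '
    f'AND resource.labels.service_name="{SERVICE}"'
)


def review_commands() -> list[list[str]]:
    return [
        # Print the service's URL from its status.
        ["gcloud", "run", "services", "describe", SERVICE, f"--region={REGION}",
         "--format=value(status.url)"],
        # Most recent 50 entries from the last hour.
        ["gcloud", "logging", "read", LOG_FILTER, "--limit=50", "--freshness=1h"],
    ]


if __name__ == "__main__":
    for cmd in review_commands():
        print(shlex.join(cmd))
```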

Step 8: Set up Cloud Armor

Assuming you want to restrict traffic to only allowed IPs, create a new Cloud Armor policy. I’d set its default rule to deny all traffic, then add rules that allow only the IP address ranges you specify. This reduces the attack surface of the BitWarden self-hosted infrastructure.

Reference this link for an overview of Cloud Armor if needed.
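A default-deny Cloud Armor policy with an allowlist could be built like this. The policy name is hypothetical, and the ranges are reserved documentation addresses you would replace. The sketch assumes a limit of 10 source ranges per basic rule, so check the current Cloud Armor quotas. Longer lists are split across consecutive priorities.

```python
import ipaddress
import shlex

POLICY = "bw-allowlist"                          # hypothetical name
ALLOWED = ["203.0.113.0/24", "198.51.100.7/32"]  # documentation ranges: replace
DEFAULT_RULE_PRIORITY = "2147483647"             # the policy's built-in default rule
MAX_RANGES_PER_RULE = 10                         # assumed per-rule limit; verify


def cloud_armor_commands(allowed: list[str]) -> list[list[str]]:
    # ip_network() raises ValueError on malformed CIDRs or stray host bits.
    ranges = [str(ipaddress.ip_network(r)) for r in allowed]
    cmds = [
        ["gcloud", "compute", "security-policies", "create", POLICY],
        # Flip the default rule from allow to deny.
        ["gcloud", "compute", "security-policies", "rules", "update", DEFAULT_RULE_PRIORITY,
         f"--security-policy={POLICY}", "--action=deny-403"],
    ]
    # One allow rule per chunk of ranges, at priorities 1000, 1001, ...
    for i in range(0, len(ranges), MAX_RANGES_PER_RULE):
        chunk = ranges[i:i + MAX_RANGES_PER_RULE]
        cmds.append(["gcloud", "compute", "security-policies", "rules", "create",
                     str(1000 + i // MAX_RANGES_PER_RULE), f"--security-policy={POLICY}",
                     "--src-ip-ranges=" + ",".join(chunk), "--action=allow"])
    return cmds


if __name__ == "__main__":
    for cmd in cloud_armor_commands(ALLOWED):
        print(shlex.join(cmd))
```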

Step 9: SSL Policies

The default GCP SSL policy is intended to be highly compatible, even with older clients that don’t support modern TLS. Typically, when discussing weak ciphers on a scan or report, I tend to be the one saying: “This is not how the bad guys get you.” However, when it comes to your password manager communicating with its back-end infrastructure, I think anything even slightly weak is pretty inexcusable. So create a new SSL policy with the minimum TLS version set to 1.2 and the profile set to Restricted. That will land you in a pretty good place.

Reference this link for an overview if needed.
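The SSL policy itself is a single gcloud command. The sketch also includes a small check, using Python’s `ssl` module, that reports which TLS version and cipher your client negotiates once the load balancer and DNS are live. It shows what a modern client gets, not that older protocols are refused. The policy name and the `BW_HOST` environment variable are hypothetical.

```python
import os
import shlex
import socket
import ssl

SSL_POLICY = "bw-tls12-restricted"  # hypothetical name


def ssl_policy_command() -> list[str]:
    return ["gcloud", "compute", "ssl-policies", "create", SSL_POLICY,
            "--profile=RESTRICTED", "--min-tls-version=1.2"]


def negotiated_tls(host: str, port: int = 443, timeout: float = 5.0) -> tuple[str, str]:
    """Connect once and return the (TLS version, cipher name) the server chose."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version(), tls.cipher()[0]


if __name__ == "__main__":
    print(shlex.join(ssl_policy_command()))
    host = os.environ.get("BW_HOST")  # your BitWarden hostname, once it is live
    if host:
        print(negotiated_tls(host))
```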

Step 10: Load Balancer

It’s finally time to expose our service to the web! Well, at least the parts of the web allowed in your Cloud Armor policy. Creating a Load Balancer is somewhat involved. Reference this link for documentation as needed.

- Create a new HTTPS Global Load Balancer

- Reserve a static IP for your front end. Choose the SSL policy we created above.

- Create a new serverless backend that points to your Cloud Run service. Integrate your Cloud Armor policy with this to restrict access to only IPs you allow.

- Get a Google-managed certificate for the hostname you want to use for this service. This hostname should match the value of the BW_DOMAIN environment variable above. Note that after you point DNS in the next step, it takes some time for this certificate to be provisioned and go live.
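The load balancer pieces can be created with gcloud in the order below. Every resource name here is hypothetical. I’ve assumed the classic external HTTPS load balancer (`EXTERNAL` scheme). The sketch omits an HTTP-to-HTTPS redirect.

```python
import shlex

# Hypothetical placeholders; ARMOR_POLICY and SSL_POLICY are the objects
# from the Cloud Armor and SSL policy steps.
REGION = "us-central1"
SERVICE = "bitwarden"
HOSTNAME = "bw.example.com"  # must match BW_DOMAIN
ARMOR_POLICY = "bw-allowlist"
SSL_POLICY = "bw-tls12-restricted"
ADDR, NEG, BACKEND = "bw-ip", "bw-neg", "bw-backend"
CERT, URL_MAP, PROXY, RULE = "bw-cert", "bw-url-map", "bw-https-proxy", "bw-https-rule"


def load_balancer_commands() -> list[list[str]]:
    return [
        ["gcloud", "compute", "addresses", "create", ADDR, "--global"],
        # Serverless NEG pointing at the Cloud Run service.
        ["gcloud", "compute", "network-endpoint-groups", "create", NEG, f"--region={REGION}",
         "--network-endpoint-type=serverless", f"--cloud-run-service={SERVICE}"],
        ["gcloud", "compute", "backend-services", "create", BACKEND, "--global",
         "--load-balancing-scheme=EXTERNAL"],
        ["gcloud", "compute", "backend-services", "add-backend", BACKEND, "--global",
         f"--network-endpoint-group={NEG}", f"--network-endpoint-group-region={REGION}"],
        # Attach the Cloud Armor allowlist to the backend.
        ["gcloud", "compute", "backend-services", "update", BACKEND, "--global",
         f"--security-policy={ARMOR_POLICY}"],
        # Google-managed certificate; it becomes active only after DNS points here.
        ["gcloud", "compute", "ssl-certificates", "create", CERT,
         f"--domains={HOSTNAME}", "--global"],
        ["gcloud", "compute", "url-maps", "create", URL_MAP, f"--default-service={BACKEND}"],
        ["gcloud", "compute", "target-https-proxies", "create", PROXY, f"--url-map={URL_MAP}",
         f"--ssl-certificates={CERT}", f"--ssl-policy={SSL_POLICY}"],
        ["gcloud", "compute", "forwarding-rules", "create", RULE, "--global",
         "--load-balancing-scheme=EXTERNAL", f"--address={ADDR}",
         f"--target-https-proxy={PROXY}", "--ports=443"],
    ]


if __name__ == "__main__":
    for cmd in load_balancer_commands():
        print(shlex.join(cmd))
```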

Step 11: Add a host name to your DNS

The last deployment step is to point the hostname you are going to use with BitWarden to your load balancer front end IP in DNS.
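If your zone happens to be hosted in Cloud DNS, the record could be created as shown. The zone name is hypothetical. With another DNS provider, you would only need the IP lookup. The `resolves_to` helper confirms that the name points at the load balancer before you start waiting on the certificate.

```python
import os
import shlex
import socket

ADDR = "bw-ip"               # hypothetical static address name
HOSTNAME = "bw.example.com"  # must match BW_DOMAIN
ZONE = "example-com"         # hypothetical Cloud DNS zone

# Prints the reserved load balancer IP.
LOOKUP_IP = ["gcloud", "compute", "addresses", "describe", ADDR, "--global",
             "--format=value(address)"]


def dns_commands(ip: str) -> list[list[str]]:
    return [
        ["gcloud", "dns", "record-sets", "create", f"{HOSTNAME}.", f"--zone={ZONE}",
         "--type=A", "--ttl=300", f"--rrdatas={ip}"],
    ]


def resolves_to(hostname: str, expected_ip: str) -> bool:
    """True if any IPv4 address returned for hostname equals expected_ip."""
    infos = socket.getaddrinfo(hostname, 443, socket.AF_INET, socket.SOCK_STREAM)
    return any(info[4][0] == expected_ip for info in infos)


if __name__ == "__main__":
    print(shlex.join(LOOKUP_IP))
    ip = os.environ.get("BW_LB_IP")  # paste the address printed by the command above
    if ip:
        for cmd in dns_commands(ip):
            print(shlex.join(cmd))
        print("resolves:", resolves_to(HOSTNAME, ip))
```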

BitWarden UI

Assuming all has gone well, you should now be able to access your self hosted BitWarden user interface using the URL you chose. Configuration of BitWarden is out of scope for this post. Please refer to the BitWarden documentation - which is excellent.

BitWarden Admin UI

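If all is well and your SMTP account is working as expected, you should also be able to access the Admin UI. The Admin UI signs you in by emailing a login link to an address listed in adminSettings__admins, which is why SMTP needs to work.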

I noticed that, in my case, accessing the Admin UI at https://bw.domain.com/admin seemed to redirect to the non-https URL http://bw.domain.com/admin/login?returnUrl=%2Fadmin. This may be a bug. It may also be because I am terminating TLS on the GCP load balancer. Manually changing the redirected URL back to https works around this.
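To see exactly where `/admin` redirects, you can request it without following redirects. This standard-library sketch does that. The `BW_HOST` environment variable is hypothetical. A relative `Location` header is treated as fine because it keeps the current scheme.

```python
import http.client
import os
from urllib.parse import urlsplit


def is_downgrade(location: str) -> bool:
    """True if a redirect Location header points at plain http://."""
    return urlsplit(location).scheme == "http"


def admin_redirect(host: str, path: str = "/admin") -> tuple[int, str]:
    """GET path over HTTPS without following redirects; return (status, Location)."""
    conn = http.client.HTTPSConnection(host, timeout=10)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location", "")
    finally:
        conn.close()


if __name__ == "__main__":
    host = os.environ.get("BW_HOST")  # e.g. bw.domain.com
    if host:
        status, location = admin_redirect(host)
        print(status, location, "DOWNGRADE" if is_downgrade(location) else "ok")
```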

Questions / Comments / Corrections

Obviously, I can’t help everyone set up their own self-hosted BitWarden instances on GCP for free. However, if you find an error in what I’ve written here, or you think it would be helpful for me to add some specifics to any particular step, let me know and I’ll consider that. Feel free to reach out using the tiny icons at the very bottom of this page. Thanks for reading!